Design

Developing Functionality Using Business Rules

Building an application is both an art and a science. Translating clinical best practices, crafting questions and answers, arranging a workflow, and architecting a comprehensive logic model are the essential design components the citizen developer is responsible for on the EVAL platform. Fundamentally, every application must pass the following litmus test: “Will the data and business logic seamlessly integrate to form meaningful and actionable insights?” As a citizen developer, your primary design objective is to transform raw data into actionable insights that conform to a coherent data and logic model. A systems-based perspective will keep you grounded in your pursuit of data transformation.

Data Transformation

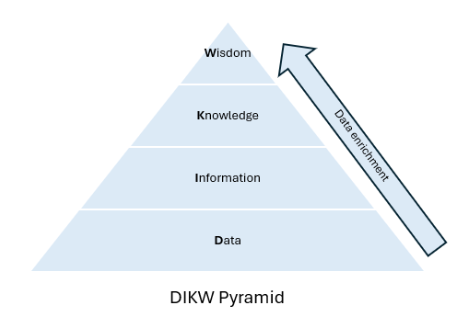

In isolation, data have little or no meaning. The task of the citizen developer is to collect, process and analyze data to generate information, which can then be used in the clinical setting to develop knowledge and wisdom. The DIKW (data, information, knowledge, wisdom) pyramid is a hierarchical model that illustrates data transformation and represents the relationships between different levels of information processing. Data enrichment begins at the bottom of the pyramid and gains value as it moves up, progressing from hindsight to insight to foresight. As you move up the DIKW pyramid, information is data plus meaning, knowledge is derived by discovering patterns and relationships between types of information, and wisdom is the understanding and internalization of those patterns and relationships.

The four levels of the DIKW pyramid are:

| Concept | Definition |
|---|---|
| Wisdom | Understanding and internalization |
| Knowledge | Derived by discovering patterns and relationships between types of information |
| Information | Data plus meaning |
| Data | Little or no meaning in isolation |

Once you have defined a problem with a specific user in mind and a clear objective, you can work with data to build a digital model that connects users to a higher order of meaning, patterns, relationships and understanding. Constructing a data model (such as a concept map) is a common practice in the software development life cycle. It aligns stakeholders around the why, how and scope of your data project and, in turn, promotes transparency, peer engagement and trust.

Modeling Data

By using data models, citizen developers and stakeholders can agree on the data they’ll capture and how they want to use it before building. Like a blueprint for a house, a data model defines what to build and how before construction starts, after which changes become much more complicated. Conceptualizing and visualizing how data will be labeled, captured, used and stored, and how it relates to a result (e.g., a recommendation or calculation), prevents design and development errors, the capture of unnecessary data, and the duplication of data in multiple locations. Additionally, connecting the model to objectives, user stories and business logic encourages analysis of the app's overall efficacy and impact. Several free concept mapping tools are available online; the data model examples below were developed using PowerPoint.

There is no standard format for conceptual models; they are simply a way to communicate with and align stakeholders. Depending on your goals and the complexity of the project, data models may fall into three categories: conceptual, logical and physical. A conceptual model offers a high-level view of the app’s content, organization and relevant business rules, with an emphasis on the general workflow. A logical data model builds on a conceptual model and defines the project’s data elements and relationships; it shows the names and data attributes of the specific entities that will make up the app's data source library. A physical data model is more technical and may be helpful when using spreadsheet-based calculations and conditional computations. For a deeper dive, Geoffrey Keating (2021, June 8) from Twilio provides a good overview. Let’s walk through a few data model examples.

Use Case: Perioperative Risk in Warfarin Patients

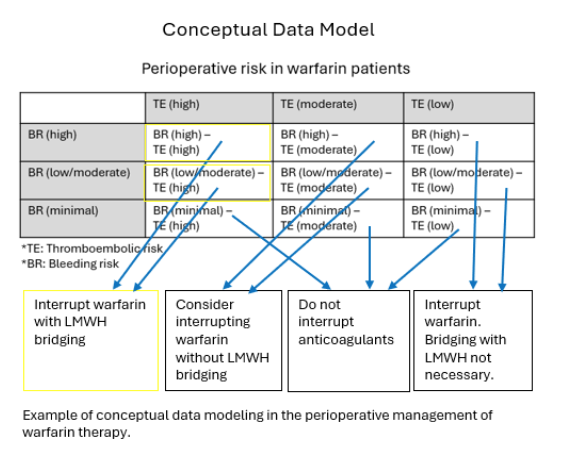

Below are examples of conceptual and logical data modeling in the perioperative management of warfarin therapy. Let's focus on one user story, a few business rules, and how a conceptual data model might develop the story.

As a primary care physician, I want to determine if I should adjust my patient’s warfarin in the periprocedural period so that the patient does not have a bleeding or thromboembolic event.

- If the antithrombotic is warfarin AND the bleeding risk is high AND the thromboembolic risk is high then interrupt warfarin with LMWH bridging.

- If the antithrombotic is warfarin AND the bleeding risk is low/moderate AND the thromboembolic risk is high then interrupt warfarin with LMWH bridging.

In the perioperative risk assessment of warfarin patients, three levels of thromboembolic risk and three levels of bleeding risk create nine risk profile combinations that trigger one of four results.
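To make the combinatorics concrete, the Python sketch below enumerates the nine (bleeding, thromboembolic) risk pairs and applies only the two business rules listed above. It is an illustration under stated assumptions, not the app's actual logic: the level labels `low`, `moderate` and `high` are hypothetical, "low/moderate" is read as either low or moderate, and the seven combinations not covered by these two rules return `None` because their results are not spelled out in this section.

```python
from itertools import product

# Hypothetical level labels; the app's actual choice wording may differ.
LEVELS = ("low", "moderate", "high")
BRIDGING = "Interrupt warfarin with LMWH bridging"


def warfarin_rule(antithrombotic: str, bleeding: str, thromboembolic: str):
    """Apply the two business rules from the text; return None when neither fires."""
    if antithrombotic != "warfarin":
        return None
    # Rule 1: high bleeding risk AND high thromboembolic risk
    if bleeding == "high" and thromboembolic == "high":
        return BRIDGING
    # Rule 2: low/moderate bleeding risk AND high thromboembolic risk
    if bleeding in ("low", "moderate") and thromboembolic == "high":
        return BRIDGING
    # The remaining combinations map to results not described in this section
    return None


# Three bleeding levels x three thromboembolic levels = nine risk profiles
profiles = list(product(LEVELS, LEVELS))

for bleeding, thromboembolic in profiles:
    print(f"{bleeding:>8} / {thromboembolic:>8}: {warfarin_rule('warfarin', bleeding, thromboembolic)}")
```

Writing the rules out this way makes it easy to see which of the nine profiles are still unmapped before any results are built.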

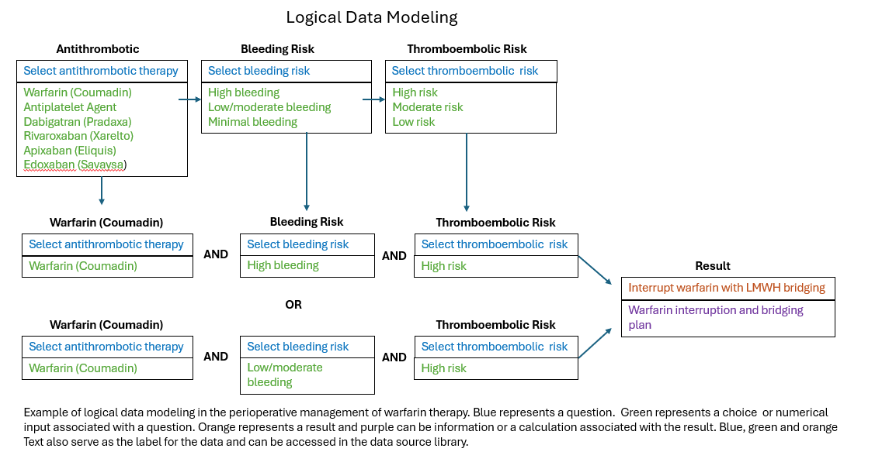

The two business rules stated above are highlighted in yellow. Taking the logic a step further, the data model below depicts how the data for these two business rules are structured in the app, as well as the logic workflow. The text in blue shows how each question will appear, and the text in green shows how the choices will appear. In EVAL, this text also serves as a label for the data and can be referenced in the data source library.

The next analysis step is to assess the breadth and depth of the app builder tools required to automate the business logic and rules. The logical data model in the warfarin example is well organized, and the problem is relatively simple to solve in a digital format. The following app builder tools are required:

- Set up the app as a decision tree workflow (questions appear one at a time)

- Construct three select-one multiple-choice questions

- Use visibility rules in each question to map the order the questions will appear

- Construct four results

- Set up each result in the information format, using visibility rules to map one or more of the nine risk combinations in the warfarin category.
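One way to picture the decision-tree setup is to treat each question's visibility rule as a condition on the answers already given. The Python sketch below is a hypothetical model, not EVAL's internal format: the question IDs, the `other` choice and the `next_question` helper are assumptions, and the next question shown is simply the first unanswered question whose visibility rule is satisfied.

```python
from typing import Callable, Dict, List, Optional, Tuple

Answers = Dict[str, str]

# Hypothetical question IDs and choices; each visibility rule is a predicate over prior answers.
QUESTIONS: List[Tuple[str, List[str], Callable[[Answers], bool]]] = [
    ("antithrombotic", ["warfarin", "other"], lambda a: True),
    ("bleeding_risk", ["low", "moderate", "high"],
     lambda a: a.get("antithrombotic") == "warfarin"),
    ("thromboembolic_risk", ["low", "moderate", "high"],
     lambda a: "bleeding_risk" in a),
]


def next_question(answers: Answers) -> Optional[str]:
    """Return the ID of the next visible, unanswered question, or None when the path ends."""
    for qid, _choices, is_visible in QUESTIONS:
        if qid not in answers and is_visible(answers):
            return qid
    return None


print(next_question({}))
print(next_question({"antithrombotic": "warfarin"}))
print(next_question({"antithrombotic": "other"}))
```

Because each rule only looks at earlier answers, the questions appear one at a time, which is the behavior a decision tree workflow calls for.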

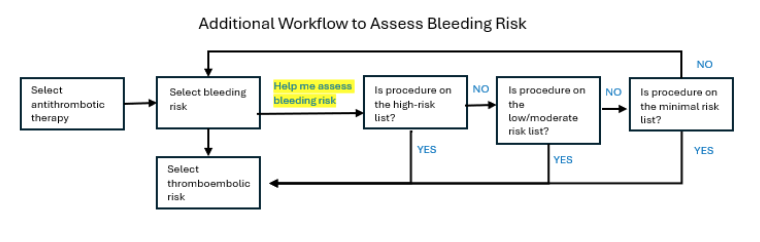

While the warfarin use case is straightforward, additional workflow considerations can provide a more sophisticated user experience but also add complexity to the logical data model. For example, adding a workflow to help the user assess bleeding risk requires additional questions and visibility rules that integrate into the primary workflow, as shown below.

Mapping out the workflow ensures that every option has a logical and meaningful outcome. The expanded workflow also generates additional data sources (e.g., “Is the procedure on the minimal risk list? > Yes”) that need to be accounted for in the data model. Translating business logic into digital logic is an art; not everyone will approach or solve a problem the same way. What matters is coherence and traceability. To see how this workflow problem was solved, refer to the MAPPP app.
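A quick way to confirm that every option has a meaningful outcome is to enumerate all answer combinations and count how many results each one makes visible: zero means a dead end, and more than one means overlapping visibility rules. The Python sketch below is a hypothetical checker; the result names and their rules are placeholders (not the warfarin app's actual mapping), deliberately left incomplete and overlapping so the check has something to report.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Combo = Tuple[str, str]  # (bleeding risk, thromboembolic risk)
LEVELS = ("low", "moderate", "high")  # assumed labels for the three levels

# Placeholder visibility rules, deliberately incomplete and overlapping for the demo.
RESULTS: Dict[str, Callable[[Combo], bool]] = {
    "Result A": lambda c: c[1] == "high",
    "Result B": lambda c: c[1] == "low",
    "Result C": lambda c: c == ("high", "moderate"),
    "Result D": lambda c: c == ("low", "high"),
}


def audit(results: Dict[str, Callable[[Combo], bool]]) -> Tuple[List[Combo], List[Combo]]:
    """Return (combinations that show no result, combinations that show more than one)."""
    gaps: List[Combo] = []
    overlaps: List[Combo] = []
    for combo in product(LEVELS, LEVELS):
        visible = [name for name, rule in results.items() if rule(combo)]
        if not visible:
            gaps.append(combo)
        elif len(visible) > 1:
            overlaps.append(combo)
    return gaps, overlaps


gaps, overlaps = audit(RESULTS)
print("No result:", gaps)
print("More than one result:", overlaps)
```

For a small workflow, exhaustive enumeration like this is cheap and gives a direct answer to whether the data model's mapping is complete.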

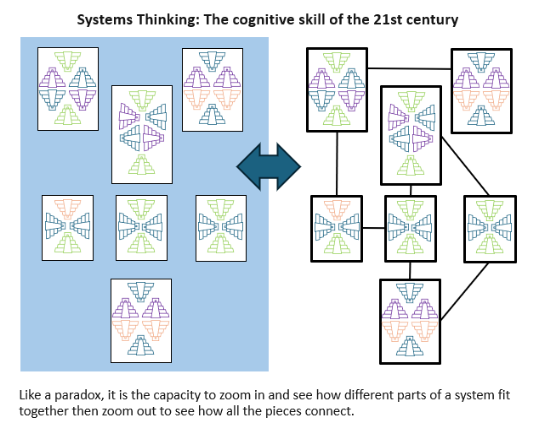

Analyzing Architecture: Why Citizen Developers Need to Adopt a Systems Point of View

In a world run by increasingly intricate and interconnected systems, the discipline of systems thinking has emerged out of the need to examine and simplify complexity, recognize patterns, and create effective solutions. MIT professor Edward Crawley calls systems thinking “the cognitive skill of the 21st century” (MIT xPRO, 2022, September 14). Paradoxically, it is the capacity to zoom in to see how the different parts of a system fit together and then zoom out to see how all the pieces connect. Systems thinking offers a holistic approach to solution development.

The Solution is a System

The app itself is a system that delivers value to a user. Value is a product of intentional design and analysis, both of which are fundamental to systems thinking. Understanding the principles of systems thinking helps citizen developers understand the ‘what’ and ‘why’ of their actions, as well as the impact of those actions on those around them, which will drive better health outcomes.

- Systems Principle #1: For a system to behave well, teams must understand the intended behavior and architecture. Architecture demonstrates how the components work together to accomplish the system’s aim.

- Systems Principle #2: The value of a system passes through its interconnections. Those interfaces, and the dependencies they create, are critical to providing ultimate value. Continuous attention to those interfaces and interactions is vital.

Use Case: GFR Calculation

Let’s walk through an app designed to calculate glomerular filtration rate (GFR). This example combines development tools (e.g., user stories, business logic, data models) and a systems perspective to analyze how the components work together to accomplish the system’s aim.

Questions to consider when analyzing an app data and logic model:

- What patterns and relationships are highlighted to form meaningful and actionable insights?

- How do the data and business logic seamlessly integrate to form meaningful and actionable insights?

- What insights are offered?

- How do these insights provide value to the customer (e.g., end users, organizations, patients)?

- How is data enriched to generate information, which can then be used in the clinical setting to develop knowledge and wisdom?

App Objective. Offer at least five eGFR equations that accommodate various labs (e.g., creatinine, cystatin C) based on availability and on population and guideline preferences, for use by primary care clinicians or nurses during adult visits involving chronic kidney disease (CKD). The equations include 2021 CKD-EPI Creatinine, 2021 CKD-EPI Creatinine-Cystatin C, 2009 CKD-EPI Creatinine, 2012 CKD-EPI Cystatin C and 2012 CKD-EPI Creatinine-Cystatin C. Closely monitor eGFR preferences in the EVAL patient chart feature and gather clinician feedback to determine the top equations and potential adjustments (e.g., race) in future quarters.

User Stories. Here are two user stories to work from.

- As a nurse, I want to calculate the GFR using serum creatinine based on the 2021 CKD-EPI guidelines so that I can monitor changes in kidney function.

- As a nurse, I want to determine the CKD stage based on the 2021 CKD-EPI calculation so that I can notify the nephrologist for further management based on the stage of kidney disease.

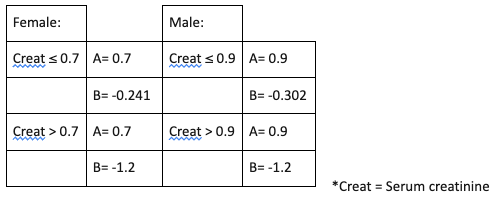

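The 2021 CKD-EPI Creatinine Formula. The 2021 CKD-EPI creatinine equation estimates GFR with the following formula.

$$\text{eGFR} = 142 \times \left(\frac{\text{Creat}}{A}\right)^{B} \times 0.9938^{\text{Age}} \times 1.012\ [\text{if female}]$$

A & B are as follows:

| Sex | Serum creatinine (mg/dL) | A | B |
|---|---|---|---|
| Female | ≤ 0.7 | 0.7 | -0.241 |
| Female | > 0.7 | 0.7 | -1.2 |
| Male | ≤ 0.9 | 0.9 | -0.302 |
| Male | > 0.9 | 0.9 | -1.2 |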

Business Rules. The following business rules are required to operationalize the CKD-EPI formula.

- If female AND creatinine ≤ 0.7 then `GFR = Round(142 * (Creat/0.7)^(-0.241) * 0.9938^Age * 1.012)`

- If female AND creatinine > 0.7 then `GFR = Round(142 * (Creat/0.7)^(-1.2) * 0.9938^Age * 1.012)`

- If male AND creatinine ≤ 0.9 then `GFR = Round(142 * (Creat/0.9)^(-0.302) * 0.9938^Age)`

- If male AND creatinine > 0.9 then `GFR = Round(142 * (Creat/0.9)^(-1.2) * 0.9938^Age)`

- If GFR ≥ 90 then CKD stage I (Normal or high)

- If GFR 60-89 then CKD stage II (Mildly decreased)

- If GFR 45-59 then CKD stage IIIa (Mildly to moderately decreased)

- If GFR 30-44 then CKD stage IIIb (Moderately to severely decreased)

- If GFR 15-29 then CKD stage IV (Severely decreased)

- If GFR < 15 then CKD stage V (Kidney failure)
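The business rules above can be sketched in Python to check the arithmetic outside the app builder. This is a minimal sketch, not EVAL's expression language: the function names are hypothetical, creatinine is assumed to already be in mg/dL, and `spreadsheet_round` assumes EVAL's `Round` rounds halves away from zero like a typical spreadsheet `ROUND` (Python's built-in `round()` rounds halves to even instead).

```python
import math


def spreadsheet_round(x: float) -> int:
    """Round half away from zero to a whole number; assumes x >= 0 (true for eGFR)."""
    return int(math.floor(x + 0.5))


def egfr_ckd_epi_2021(creat_mg_dl: float, age_years: float, sex: str) -> int:
    """Estimated GFR (mL/min/1.73 m²) following the four creatinine business rules."""
    if sex == "Female":
        a = 0.7
        b = -0.241 if creat_mg_dl <= 0.7 else -1.2
        sex_factor = 1.012
    elif sex == "Male":
        a = 0.9
        b = -0.302 if creat_mg_dl <= 0.9 else -1.2
        sex_factor = 1.0
    else:
        raise ValueError("sex must be 'Female' or 'Male'")
    return spreadsheet_round(142 * (creat_mg_dl / a) ** b * 0.9938 ** age_years * sex_factor)


def ckd_stage(gfr: int) -> str:
    """Map a rounded eGFR to the CKD stage labels used in the business rules."""
    if gfr >= 90:
        return "Stage I (Normal or high)"
    if gfr >= 60:
        return "Stage II (Mildly decreased)"
    if gfr >= 45:
        return "Stage IIIa (Mildly to moderately decreased)"
    if gfr >= 30:
        return "Stage IIIb (Moderately to severely decreased)"
    if gfr >= 15:
        return "Stage IV (Severely decreased)"
    return "Stage V (Kidney failure)"


gfr = egfr_ckd_epi_2021(creat_mg_dl=1.0, age_years=60, sex="Male")
print(gfr, ckd_stage(gfr))  # 86 Stage II (Mildly decreased)
```

Checking the stage bands from highest to lowest means each band needs only a lower bound, which works because the estimated GFR is rounded to a whole number before staging.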

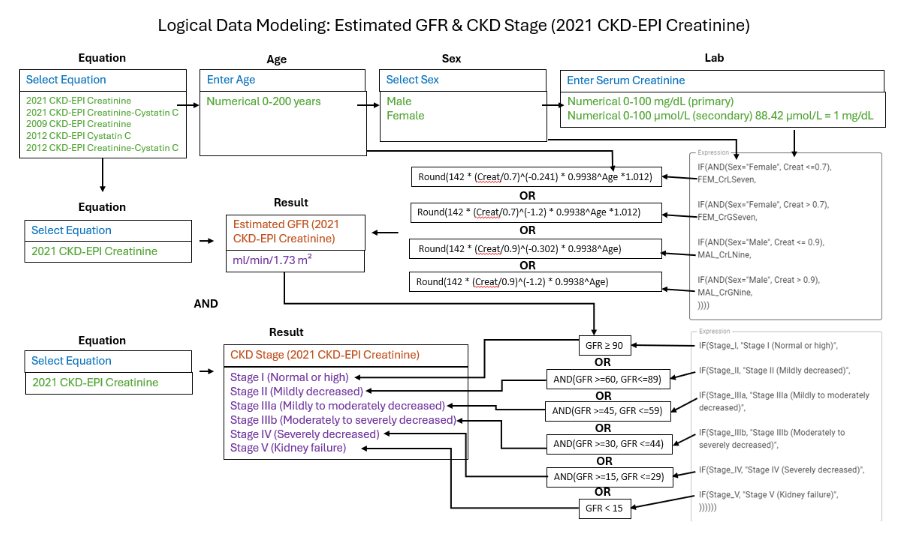

Logical Data Model. The logical data model below shows how the GFR equation and CKD stages translate into spreadsheet-based expressions (Expression boxes) that automate the business rules.

The data model highlights several interconnections that are critical to providing value as stated in the objective and user stories.

- How sex and lab (serum creatinine) determine which expression is used to calculate GFR.

- How age and lab are used in the expression to calculate GFR.

- How the estimated GFR is used to determine CKD stage.

The interfaces described above and the dependencies they create form the building blocks of data enrichment. To see this workflow in action, refer to the CKD-EPI Equations for GFR app. With the data model as a reference, it is also important to assess the level of analytical and operational skills required to accomplish the objective.

Tools and Skills. The core EVAL app builder tools and skills needed for the 2021 CKD-EPI Creatinine workflow include:

- Setting up more than one unit of measure for a question

- Using visibility rules to trigger a result

- Working with multiple variables and setting up several calculations using spreadsheet-based formulas with these concepts:

  - Arithmetic operators: `142 * (Creat/0.7)^(-0.241) * 0.9938^Age * 1.012`

  - Math functions: `Round(142 * (Creat/0.9)^(-1.2) * 0.9938^Age)`

  - Conditional computations: `IF(AND(Sex="Female", Creat<=0.7), FEM_CrLSeven, ...)`

  - Numeric comparison operators: `AND(GFR>=60, GFR<=89)`

  - Text functions: `IF(Stage_V, "Stage V (Kidney failure)", ...)`

EVAL app builder tools required to implement the 2021 CKD-EPI Creatinine workflow:

- Set up the app as a calculator workflow (all of the questions appear at the same time).

- Set up four questions:

  - The equation question is a select-one with five options.

  - The age question is a number format with years as the unit of measure.

  - The sex question is a select-one with two options.

  - The lab question is a number format with mg/dL as the primary unit of measure and an option to convert from a secondary unit of measure (µmol/L).

- Create two results, each with a visibility rule (visible when the 2021 CKD-EPI Creatinine equation is selected):

  - Estimated GFR displays a numerical result in mL/min/1.73 m² and includes:

    - Four named formula expressions (using arithmetic operators and math functions) that break up the large formula expression into easy-to-read names.

    - Three data sources: age, sex and creatinine.

    - A formula expression using four conditional computations that determines which named formula expression to use based on the business rules.

    - Ensuring that serum creatinine is in the primary unit of measure (mg/dL) that the formula expects for a result in mL/min/1.73 m².

  - CKD stage displays one of six stages and includes:

    - Six named formula expressions (using numeric comparison operators) that break up the large formula expression into easy-to-read names.

    - One data source: GFR (uses the “Estimated GFR” result).

    - A formula expression using six conditional computations and text functions that determines the correct CKD stage to display.
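The unit-of-measure step can be illustrated on its own. Serum creatinine reported in µmol/L is commonly converted to mg/dL by dividing by about 88.4; the Python sketch below assumes that factor and a hypothetical `to_mg_dl` helper (not an EVAL function), and shows why the conversion must happen before the value reaches the eGFR expression, whose cut-offs (0.7 and 0.9) are in mg/dL.

```python
# Approximate conversion factor for serum creatinine: 1 mg/dL is about 88.4 µmol/L (assumed here)
UMOL_PER_MG_DL = 88.4


def to_mg_dl(value: float, unit: str) -> float:
    """Hypothetical helper: express a creatinine value in the primary unit (mg/dL)."""
    if unit == "mg/dL":
        return value
    if unit == "µmol/L":
        return value / UMOL_PER_MG_DL
    raise ValueError(f"unsupported unit: {unit}")


# 88.4 µmol/L corresponds to 1.0 mg/dL; passing 88.4 unconverted into an expression
# that expects mg/dL would treat it as 88.4 mg/dL and yield an implausibly low eGFR.
print(to_mg_dl(88.4, "µmol/L"))
print(to_mg_dl(1.2, "mg/dL"))
```

Keeping one primary unit and converting at the input boundary means the named formula expressions only ever see mg/dL, so their thresholds stay valid.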

Testing & Peer Review

In addition to data modeling, testing is another proof of concept essential to confirming and validating the technical specifications and achieving safe, high-performing software. Software testing and peer review are intrinsic parts of the software development life cycle that reveal issues such as architectural flaws, poor design decisions, and invalid or incorrect functionality in the product. Over the years, software testing has become both a creative and an intellectual activity for testers. Leveraging EVAL’s built-in testing features and following a few tried-and-true testing principles will enable citizen developers and organizations to evaluate the software’s functionality, specifications and performance and to ensure high quality standards. Two types of testing are discussed: automated testing and manual testing. Both methods are important in the context of peer review.

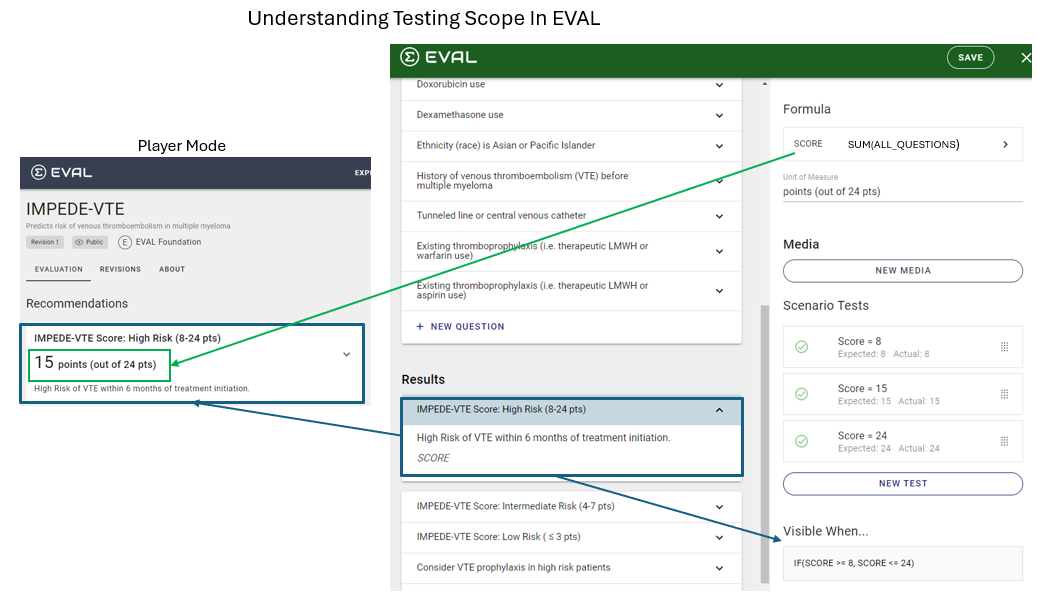

Automated Testing. The EVAL builder mode includes automated test scenarios. As you construct your workflow (e.g., questions, answers, formulas, visibility rules), each result includes a feature for setting up a test scenario that tests the workflow(s) feeding into that result. The test scenario auto-populates with a sequence of questions and answers from the app workflow. You simply select the answers or enter the inputs to test a workflow path associated with the result. EVAL displays the result the user will see and shows a validation (green checkmark) if your answers and subsequent computations match the workflow(s), formulas or visibility rules mapped to that result. Click here and scroll down to scenario tests for more information.

Manual Testing. During construction of an app and upon completion, it is important to evaluate the app in player mode prior to publication. Using the data models created for the app as a reference, manually test every workflow path and validate the results. Look for grammatical, computational or workflow errors, and evaluate the app for usability and alignment with the app objectives. Include additional content in the app's “About” section to describe the app, provide research background and illustrate how it works. Then gather feedback from a group of end users and stakeholders as they “look under the hood” in builder mode (no need to give editing permissions), review the “About” section and perform their own manual testing.

Testing Principle #1: Exhaustive Testing Is Impossible. Inputs and outputs can have an infinite number of combinations, so attempting to test 100 percent of software functionality from every possible perspective wastes resources without meaningfully improving the overall quality of the product.

Test Scenarios for Each Visibility Rule. Most software is tested with a defined set of test cases. If those test cases pass, it is assumed that the software is correct and will produce the correct output in cases that were not explicitly tested. Decision tree apps that do not use numerical inputs are typically easier to test. As a general rule in decision trees, set up a test scenario for each visibility rule within a result.

Test Scenarios That Satisfy Edge Cases. When an app includes numerical inputs and calculations, set up test scenarios with edge cases for each result. If there are no visibility rules or conditional computations associated with a calculation, select common edge cases you would expect in clinical practice. Keep in mind that a result can be set up to display both information based on visibility rules and the results of a formula calculation. You will want to set up test scenarios that satisfy edge cases for both the visibility rule and the formula. When setting up test scenarios, create a unique label that also serves as a descriptor of the test scenario. Test labels help readers understand the scope of testing in the context of a given result. Here is an example of scenario tests for a points-based app called IMPEDE-VTE Score.

In this app, the result called “IMPEDE VTE Score: High Risk (8-24 pts)” is visible when the score is between 8 and 24 points. When this result appears, a score is also calculated and displayed. Test scenarios include the edge cases of 8 points and 24 points, both of which passed with a green checkmark. Two different tests run automatically for this result. Notice that the expected and actual values match for each test scenario; this validates that the formula is working. To get a green checkmark, the test scenario must also stay within the visibility rule of a score between 8 and 24 points. In other words, if a test scenario resulted in a score of 25, the test would fail even if the calculation was correct, and vice versa. Since these edge cases pass, it is assumed that the cases in between will also pass, without the need to set up a test case for every possibility. Imagine having to set up test cases for a visibility rule of 50 to 1000 points. The rigor of automated testing combined with manual testing and peer review will allow your team to meet industry testing standards.
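The two checks described for this result can be mimicked in a few lines. In the hypothetical Python sketch below, a scenario passes only if the calculated score equals the expected value and also falls inside the result's visibility range of 8 to 24 points. The item names and point values are made up for illustration and are not the IMPEDE-VTE scoring items; this is not how EVAL implements its scenario tests.

```python
from dataclasses import dataclass
from typing import Dict, List

# Made-up yes/no items and point values; NOT the real IMPEDE-VTE variables or weights.
POINTS: Dict[str, int] = {"item_a": 8, "item_b": 4, "item_c": 3, "item_d": 9, "item_e": 1}
VISIBLE_MIN, VISIBLE_MAX = 8, 24  # visibility rule of the result under test


@dataclass
class Scenario:
    label: str                # descriptive label for the scenario
    answers: Dict[str, bool]
    expected: int             # expected score entered by the tester


def score(answers: Dict[str, bool]) -> int:
    """Sum the points of every item answered 'yes'."""
    return sum(POINTS[item] for item, yes in answers.items() if yes)


def passes(s: Scenario) -> bool:
    """Pass only if the formula matches the expected value AND the result stays visible."""
    actual = score(s.answers)
    return actual == s.expected and VISIBLE_MIN <= actual <= VISIBLE_MAX


ALL_YES = {item: True for item in POINTS}
scenarios: List[Scenario] = [
    Scenario("Lower edge: 8 pts", {"item_a": True}, expected=8),
    Scenario("Upper edge: 24 pts", {**ALL_YES, "item_e": False}, expected=24),
    Scenario("Outside range: 25 pts", ALL_YES, expected=25),
]

for s in scenarios:
    print(f"{s.label}: {'pass' if passes(s) else 'fail'}")
```

The third scenario computes the correct score yet still fails, matching the point above that a correct calculation outside the visibility rule does not validate.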

Testing Principle #2: Begin Testing Early to Deal With Problems as Early as Possible. As you build interdependencies within the app, you want to be sure the foundational layers are working as expected.

Interconnected and Dynamic Testing Automation. Every attempt to test a program during the life cycle not only identifies flaws but also suggests ways to improve the effectiveness, usability and accuracy of the app. A “test as you go” approach allows you to validate your work layer by layer. You can test as you build each result, and you can add or review tests as you build subsequent results. Automated test scenarios are interconnected with other results: they display all the results a tested workflow can generate but validate only the result under which the test is set up. The automated tests are also dynamic. As you build additional results or change the workflow, existing test scenarios automatically adapt to those changes, which can alter their validation. As a rule of thumb, go back to your test scenarios and check their validation before publication.

Testing Principle #3: Testing Reveals Defects, Not Proof of Their Absence. Testing is an opportunity to reduce the number of undiscovered defects, but finding and resolving issues does not make the program 100% error-free.

Anticipate Future Problems. Once an app is published, vigilance and monitoring are strongly recommended. Anticipating potential issues also mitigates future problems. One example is monitoring embedded apps for updates, such as the release of a new version or the deprecation of an app.

Testing Principle #4: Defect Clustering Involves Prioritizing Testing of App Components That Contain Most of the Issues. Derived from the 80:20 Pareto principle, the assumption is that 20% of the components constructed in the app cause 80% of the defects and issues.

Assess Vulnerabilities. In the previous section (Assessing and Managing Complexity), we discussed the following factors that complicate data models and contribute to app complexity.

- Number of data sources (Questions and choices)

- Type of data source (Select one, Select multiple, Numeric or spreadsheet-based formula variables)

- Number of conditions

- Advanced spreadsheet-based formulas, functions and conditional computations

- Embedded apps

With these factors in mind, a thorough analysis of the data model can help you identify the more complex aspects of the app that are worthy of a more concentrated testing effort involving strategic edge cases.

Testing Principle #5: Defect Clustering and Its Eradication Lead to the Pesticide Paradox. The pesticide paradox occurs when the same tests are repeated to eliminate clustered defects, which in turn makes it difficult to detect new defects: a sort of immunity to your testing strategy that develops through oversaturation.

Testing Variation Is Key. To overcome the pesticide paradox, regularly review and revise test scenarios and add varied test cases. When setting up multiple test scenarios within a result, avoid the temptation to repeat a limited range of answers. Try to vary the combination of answers as much as you can within the boundaries of a given result.
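One way to build variety into scenarios is to enumerate the answer combinations that stay inside a result's boundaries and then draw several different ones instead of reusing the same answers. The Python sketch below uses a made-up points-based app; the item names, point values, the 8 to 24 point range and the fixed random seed are all assumptions chosen for illustration.

```python
import random
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical yes/no items and point values for a made-up points-based app.
POINTS: Dict[str, int] = {"item_a": 8, "item_b": 4, "item_c": 3, "item_d": 9, "item_e": 1}
LOW, HIGH = 8, 24  # boundaries of the result being tested


def combos_in_range() -> List[Tuple[bool, ...]]:
    """All yes/no answer combinations whose total score falls within [LOW, HIGH]."""
    items = list(POINTS)
    valid = []
    for answers in product((False, True), repeat=len(items)):
        total = sum(POINTS[i] for i, yes in zip(items, answers) if yes)
        if LOW <= total <= HIGH:
            valid.append(answers)
    return valid


rng = random.Random(42)  # fixed seed so the chosen scenarios are reproducible
candidates = combos_in_range()
picked = rng.sample(candidates, k=min(5, len(candidates)))
for answers in picked:
    print(dict(zip(POINTS, answers)))
```

Sampling without replacement guarantees the picked scenarios are all distinct. For larger apps where enumerating every combination is impractical, the same idea works by drawing random answers and keeping only those that land inside the range.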

Testing Principle #6: Testing Is Context-Dependent. Every app is heterogeneous and has its own identity.

Create Test Scenarios With the End User’s Perspective in Mind. Context dependence means different types of applications require different testing techniques. Delivering value to a user depends on app design, and confirming that value means testing around a set of use cases. Think of context as a way to customize a value proposition. Delivering value starts by identifying a need (business rules) within a specific context (user and setting), along with the driver of that need. A user story connects context and action to value, which ought to be reflected in the app design. Testing must include the end user's perspective and how users will use the application.

User Story: As a dialysis nurse, I want to calculate the GFR using serum creatinine based on the 2021 CKD-EPI guidelines so that I can monitor changes in kidney function.

Testing includes evaluating if the app design is consistent with its proposed value. In addition to setting up test scenarios, employing a manual testing strategy during peer review will offer depth to your analysis and inject testing variety with the end user’s perspective in mind. When conducting testing, consider the following questions:

- Can users navigate the workflow without difficulty or confusion?

- Does the app workflow support current practice workflow?

- Are inputs and outputs representative of the context?

- What are the most common inputs or input ranges for this population?

- Are there outliers that are not accounted for?

- Does the app satisfy the stated business requirements for a defined user?

- Will users accept or reject the results produced by the app? Which users, and why?

The Enterprise Building the App is a System, Too

There is another layer to systems thinking that acknowledges the impact of another system: the people and processes of the organization that builds the application. Understanding how multiple systems interact helps leaders and teams navigate the interconnected complexity of solution development, the organization, and the larger picture of implementation and adoption. The citizen developer will often need to manage influences and expectations from several systems and consider their impact at every stage, including app planning, design, development, publication and maintenance. Additional systems principles can guide leadership initiatives.

- Systems Principle #3: Building in the context of complex systems is a social endeavor. Cultivating an environment of collaboration provides insight on the best way to build better systems.

- Systems Principle #4: Subject matter experts and end users are integral to the development value stream. Forging partnerships based on a long-term foundation of trust optimizes value.

- Systems Principle #5: The flow of value through an enterprise requires a cross-functional approach. Optimizing one component does not optimize a system. Accelerating flow requires eliminating functional silos and creating cross-functional organizations.

- Systems Principle #6: A system can evolve no faster than its slowest integration point. The faster the full system can be integrated and evaluated, the quicker the system knowledge grows. Practice-based research networks (PBRNs) are a good example of creating low friction systems at the point of care that foster a continuous learning culture.
